Akamai’s security system is so strong that you can only avoid it with the right blueprint. We've looked deep into Akamai's defenses and compiled the best ways you can bypass them. In this article, you'll see the different ways to bypass Akamai and avoid getting blocked during web scraping.

- Method 1: Great rotating proxies.

- Method 2: Web scraping API (easiest and most reliable).

- Method 3: Use headless browsers.

- Method 4: Avoid honeypots.

- Method 5: Optimize HTTP headers.

- Method 6: Stealth techniques for headless browsers.

- Method 7: Don't contradict the JavaScript challenge.

What Is Akamai Bot Manager

Bot detection is a group of techniques to identify, classify, and protect websites from bot traffic. They include anti-bots and Web Application Firewalls (WAFs) like the Akamai Bot Manager.

Earlier anti-bot methods involved detecting high-load IPs and checking headers. However, web scrapers found ways around these methods, causing anti-bots to evolve into more advanced mechanisms, like storing and identifying IPs and botnets and monitoring user activity with machine learning.

The Akamai Bot Manager is a security measure that blocks bots before they erode customers' trust. That covers a wide range of security actions, and web scraping scripts are among its targets.

Akamai applies AI to detect new kinds of bots from a bot directory gathered across many high-traffic sites. Like most advanced anti-bot systems, Akamai even offers a bot-scoring model for site owners to customize their blocking aggressiveness.

These security measures apply to malicious attacks, including DDoS, fake account creation, and phishing.

The two critical aspects of bot management are:

- Distinguishing humans from bots.

- Differentiating good bots from bad ones.

Not all bots are malicious. Googlebot, Google's crawler, is an example of a good bot because it crawls and indexes content across many websites for easy searching.

How Does Akamai Bot Manager Work

As mentioned above, Akamai uses a wide variety of techniques. We'll explain some of them before we dive into the technical implementation and, of course, learn how to bypass them.

These are the moving parts of Akamai Bot Manager's detection:

- Botnets. Akamai maintains historical data on known bots and feeds it into its system. The same range of IPs, common mistakes in user-agent naming, or similar patterns might give away a botnet. Once a pattern is identified and recorded, blocking it feels safe. No humans would browse like that.

- IPs. Blocking IPs might sound like the easiest approach, but it's more complex. IPs don't change ownership often, but whoever uses them does. Attackers might use IPs that belong to common ISPs, masking their origin and intent. A regular user might get that IP address a week later and, by then, should be able to access the content without blocks.

- CAPTCHAs. The best procedure for telling humans from bots, right? Again, this is a controversial one. By using CAPTCHAs in every session, a site would drive many users away. Nobody wants to solve them for every other request. As a defensive tactic, CAPTCHAs will only appear if the traffic is suspicious.

- Browser and sensor data. Akamai injects a JavaScript file that watches the browser and runs some checks, then sends all the data to the server for processing. We'll see later how it's done and what data it sends.

- Browser fingerprinting. As with the botnets, common structures or patterns can give you away. Scrapers might change and mask their requests. But some details (browser or hardware-related) are hard to hide. And Akamai will take advantage of that.

- Behavioral analysis. Akamai compares visits against historical user behavior on the site, checking patterns and common actions. For example, users can visit products directly from time to time. But if they never go to a category or search page, it might trigger an alert.

- TLS fingerprinting. Akamai fingerprints the TLS (Transport Layer Security) handshake a client sends to stop unwanted requests before they reach the origin server. Most web scraping tools establish a valid TLS connection, but an HTTP library's handshake (for example, the cipher suites and extensions it offers) often looks different from a real browser's. Combined with anomalies like high request frequency from one IP, misconfigured user agents and headers, and unusual navigation behavior, that can flag you as a bot.

Scraping detection isn't black or white. Akamai Bot Manager combines all the above and several others. Then, based on the site's setup, it'll decide whether to block a user. Bot detection services use a mix of server-side and browser-side detection techniques.

Method #1: Great Rotating Proxies

Free proxies will almost certainly not bypass Akamai. It's only a matter of time before the Akamai Bot Manager detects and blocks them.

Effective web scraping proxies have two common properties (non-exclusive):

- Rotating: They change IPs per request or after a short time.

- Origin: There's always a description of where the IPs come from, such as data center or residential IPs. The Akamai Bot Manager will have a hard time flagging an IP from an ordinary 4G network provider.
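To make the rotating property concrete, here is a minimal sketch of round-robin rotation. The proxy URLs and the createRotator helper are hypothetical names used only for illustration; a real provider usually handles rotation for you behind a single endpoint.

```javascript
// Hypothetical proxy endpoints; replace them with the ones your provider gives you.
const proxies = [
  'http://proxy-1.example.com:8080',
  'http://proxy-2.example.com:8080',
  'http://proxy-3.example.com:8080',
];

// Returns a function that hands out proxies in round-robin order.
function createRotator(pool) {
  if (pool.length === 0) throw new Error('The proxy pool is empty');
  let index = 0;
  return () => {
    const proxy = pool[index];
    index = (index + 1) % pool.length;
    return proxy;
  };
}

// Assign a different proxy to each request; the fourth wraps around to the first.
const nextProxy = createRotator(proxies);
const assigned = ['/page/1', '/page/2', '/page/3', '/page/4'].map((path) => ({
  path,
  proxy: nextProxy(),
}));
console.log(assigned);
```

Round-robin is the simplest policy. Rotating after a short time instead of per request only requires calling nextProxy() on a timer rather than before each request.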

Method #2: Web Scraping API

It's best to let others do the heavy lifting sometimes. ZenRows is a web scraping solution to bypass the Akamai Bot Manager or any other anti-scraping system. It automatically configures your request headers, rotates premium proxies, and uses AI to bypass any anti-bot system.

This means you can forget about all the complicated parts we discussed, focus on your data extraction logic, and scrape any website at scale without getting blocked.

Method #3: Use Headless Browsers

Akamai uses JavaScript challenges behind the scenes to differentiate between bots and humans. It's essential to bypass them during web scraping, and you'll need a headless browser to do that.

A headless browser is a real browser, like Chrome or Firefox, running without the graphical interface, which means it can download and run the Akamai challenge. It can even mimic a legitimate user when paired with solid proxies.

Static scraping techniques using cURL, Axios in JavaScript, or Requests in Python won't work for websites that render content dynamically with JavaScript. So, besides solving JavaScript challenges, you also need a browser automation tool like Selenium to scrape dynamic content.

Method #4: Avoid Honeypots

Honeypots are traps that lure malicious requests and scraping bots into a controlled network where they can be monitored and analyzed. They often involve mimicking legitimate websites or using fake data to attract attackers. Akamai's security system includes honeypots to learn about attackers.

The first step in avoiding honeypots is to identify one when you see it. But that's usually not a simple task. For example, you might be in a honeypot if a website you're trying to scrape asks you to provide specific details about your computer or device.

Analyzing a website's network pattern, behavior over time, and initial response to your scraping request might also help, but not enough. Another way to evade honeypots is to mask your request with premium proxies. Also, respect a website's robots.txt rules to avoid being flagged as a scraper.
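As a rough illustration of respecting robots.txt, the sketch below checks a path against the Disallow rules that apply to all crawlers. It is deliberately simplified: it ignores Allow rules, wildcards, and grouped User-agent lines, and the robots.txt content and function names are made up for the example.

```javascript
// Collects the Disallow prefixes that apply to every crawler ("User-agent: *").
function parseDisallowRules(robotsTxt) {
  const rules = [];
  let appliesToAll = false;
  for (const rawLine of robotsTxt.split('\n')) {
    const line = rawLine.split('#')[0].trim(); // drop comments
    const separator = line.indexOf(':');
    if (separator === -1) continue;
    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();
    if (field === 'user-agent') appliesToAll = value === '*';
    else if (field === 'disallow' && appliesToAll && value) rules.push(value);
  }
  return rules;
}

// A path is allowed when no Disallow prefix matches its start.
function isAllowed(path, rules) {
  return !rules.some((prefix) => path.startsWith(prefix));
}

// Hypothetical robots.txt content.
const robotsTxt = `
User-agent: *
Disallow: /checkout
Disallow: /account/

User-agent: SomeBot
Disallow: /
`;

const rules = parseDisallowRules(robotsTxt);
console.log(isAllowed('/products/shoes', rules)); // true
console.log(isAllowed('/checkout/cart', rules)); // false
```

For production use, a maintained robots.txt parser is a safer choice than this sketch.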

Method #5: Optimize HTTP Headers

Browsers send a set of request headers by default, so be careful when configuring them in your scraper. For instance, Akamai can quickly tell that something is wrong when you use Chrome's header information for a Firefox browser.

Ensure the whole header set is complete and accurate. Say you want to add Google as a referrer. You could use referer: https://www.google.com/ (the HTTP header is spelled with a single "r"). That still requires additional backup information to make it more legitimate.

Chrome would send sec-fetch-site: none by default. But if coming from another source like Google, it will use sec-fetch-site: cross-site. These are small header details a real browser won't omit. If confused, you should stick to the standard headers for scraping.
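The sketch below shows one way to keep those two headers consistent. The buildNavigationHeaders helper and the header values are assumptions for illustration; copy the real set from your own browser's DevTools, since it varies by browser and version. It also assumes the referrer is always a different site than the target.

```javascript
// Builds a Chrome-like set of navigation headers.
// The values are illustrative assumptions, not an authoritative list.
function buildNavigationHeaders({ userAgent, referer } = {}) {
  const headers = {
    'user-agent': userAgent,
    accept: 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'accept-language': 'en-US,en;q=0.9',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-user': '?1',
    // A direct visit has no initiator; arriving from another site is cross-site.
    'sec-fetch-site': referer ? 'cross-site' : 'none',
  };
  if (referer) headers.referer = referer;
  return headers;
}

// Example user agent string; use the one that matches the browser you imitate.
const exampleUserAgent =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36';

console.log(buildNavigationHeaders({ userAgent: exampleUserAgent }));
console.log(
  buildNavigationHeaders({ userAgent: exampleUserAgent, referer: 'https://www.google.com/' })
);
```

Deriving sec-fetch-site from the referrer in one place means the two values can't drift apart when you change one of them.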

Method #6: Stealth Techniques for Headless Browsers

Stealth techniques modify how the browser responds to specific challenges. Akamai's detection software will query the browser, hardware, and many other things. Stealth mode overwrites some of those answers to avoid patterns and fingerprinting, adding variation and masking the browser.

Some of these actions are detectable. You'll have to test and check for the right combination. Each site can have a different configuration.

The good thing is that you already understand how Akamai decides which requests to block. Or at least you know what data they gather for that.

Method #7: Don't Contradict the JavaScript Challenge

It might sound obvious, but some data points are derived from others or duplicated. If you mask one and forget the other, Akamai's bot detection will see it and take action, or at least mark it internally.

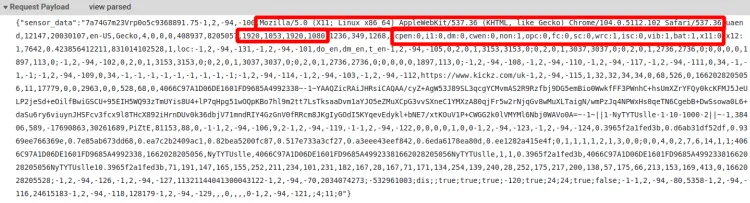

In the sensor data example image, we can see that it sends window size. Most data points are related to the actual screen and the available inner and outer sizes.

Inner, for example, should never be bigger than outer. Random values won't work here. You'd need a set of actual sizes.
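A quick way to catch this kind of contradiction before sending anything is a consistency check over the sizes you plan to report. The sketch below is an assumption-based illustration: the field names and the findSizeContradictions helper are hypothetical, and it only covers the relations that hold by definition (inner fits inside outer, available area fits inside the screen).

```javascript
// Returns a list of contradictions in a set of reported screen and window sizes.
function findSizeContradictions(sizes) {
  const problems = [];
  if (sizes.innerWidth > sizes.outerWidth) problems.push('innerWidth > outerWidth');
  if (sizes.innerHeight > sizes.outerHeight) problems.push('innerHeight > outerHeight');
  if (sizes.availWidth > sizes.screenWidth) problems.push('availWidth > screenWidth');
  if (sizes.availHeight > sizes.screenHeight) problems.push('availHeight > screenHeight');
  return problems;
}

// A plausible set of sizes taken from one real configuration.
const realistic = {
  screenWidth: 1920, screenHeight: 1080,
  availWidth: 1920, availHeight: 1040,
  outerWidth: 1920, outerHeight: 1040,
  innerWidth: 1920, innerHeight: 937,
};

// Independently randomized values that contradict each other.
const randomized = { ...realistic, innerWidth: 1600, outerWidth: 1200 };

console.log(findSizeContradictions(realistic)); // []
console.log(findSizeContradictions(randomized)); // [ 'innerWidth > outerWidth' ]
```

Sampling whole profiles captured from real devices, rather than randomizing each field on its own, avoids this class of mismatch.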

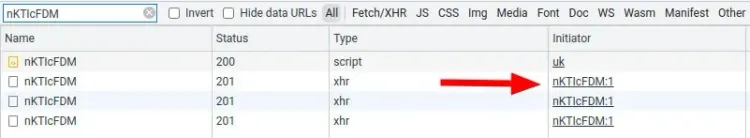

Akamai's JavaScript Challenge Explained

As we can see in the image above, the script triggers a POST request with a huge payload. Understanding this payload is crucial if we want to bypass Akamai Bot Detection. It won't be easy.

Deobfuscate The Challenge

You can download the file here. To see it live, visit KICKZ and look for the file in DevTools. You won't understand a thing, but don't worry; that's the idea!

First, run the content through a JavaScript deobfuscator. That will convert the weird characters into strings. Then, we need to replace the references to the initial array with those strings.

To make things more complicated, they don't declare variables or object keys with a plain name. They use indirection: referencing that array with the corresponding index.

We haven't found an online tool that nails the replacement process. But you can do the following:

- Cut the _acxj variable from the generated code.

- Create a file and place that variable.

- Then put the rest of the code in another variable.

- Replace (not perfect) all references to the array; see the code below.

- Review since some of them will fail.

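// The string table that the obfuscated script indexes into (truncated here).
var _acxj = ['csh', 'RealPlayer Version Plugin', 'then', /* ... */];
// The rest of the script, stored as a string (truncated here).
const code = `var _cf = _cf || [], ...`;
const result = code
  // Property access first: obj[_acxj[2]] becomes obj.then
  .replace(/\[_acxj\[(\d+)\]\]/g, (_, i) => `.${_acxj[i]}`)
  // Every remaining reference becomes a quoted string literal.
  .replace(/_acxj\[(\d+)\]/g, (_, i) => JSON.stringify(_acxj[i]));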

It'll need some manual adjustments since it's a clumsy attempt. A proper replacement would need more details and exceptions.

To save you time, we've done that: download our final version to see how it looks. The original file changes frequently, so the result might be different now. But it'll help you understand what data they send to the server and how.
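One of the manual adjustments concerns strings that aren't valid identifiers: turning x[_acxj[1]] into x.RealPlayer Version Plugin produces broken code. The following is a runnable sketch of a slightly more careful replacement. The inlineStrings helper and the tiny stand-in script are our own assumptions for illustration, not part of Akamai's file.

```javascript
// Hypothetical, tiny stand-ins for the real string table and script body.
const strings = ['csh', 'RealPlayer Version Plugin', 'then'];
const obfuscated = 'p[_acxj[2]](cb); x[_acxj[1]] = 1; var k = _acxj[0];';

// True when the string can be written after a dot (obj.name).
const isIdentifier = (s) => /^[A-Za-z_$][A-Za-z0-9_$]*$/.test(s);

function inlineStrings(code, table, name = '_acxj') {
  const member = new RegExp(`\\[${name}\\[(\\d+)\\]\\]`, 'g');
  const plain = new RegExp(`${name}\\[(\\d+)\\]`, 'g');
  return code
    // obj[_acxj[i]] becomes obj.name, or obj["some name"] when a dot won't work.
    .replace(member, (match, i) => {
      const value = table[Number(i)];
      if (value === undefined) return match; // unknown index: leave untouched
      return isIdentifier(value) ? `.${value}` : `[${JSON.stringify(value)}]`;
    })
    // Any remaining _acxj[i] becomes a quoted string literal.
    .replace(plain, (match, i) => {
      const value = table[Number(i)];
      return value === undefined ? match : JSON.stringify(value);
    });
}

console.log(inlineStrings(obfuscated, strings));
// p.then(cb); x["RealPlayer Version Plugin"] = 1; var k = "csh";
```

This is still a text-level replacement, so computed indexes such as _acxj[a + 1] are left as they are and need a manual review.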

Akamai's Sensor Data

You can see above the data sent for processing. We can take as examples the items highlighted in red. From its content, we can guess where the first two come from: user agent and screen size. The third one looks like a JSON object, but we can't know what it represents just by its keys. But let's find out!

The first key, cpen, is present in the script. A quick find in the file will tell us so. These are the lines that reference it:

var t = [],
  a = window.callPhantom ? 1 : 0;
t.push(",cpen:" + a);

What does it mean? The script checks if callPhantom exists. A quick search on Google tells us that it's a feature that PhantomJS introduces, which means that sending cpen:1 is probably an alert for Akamai. No legit browser implements that function.

If you check the following lines, you'll see that they keep sending browser data. window.opera, for example, should never be true if the browser isn't Opera. Or mozInnerScreenY only exists on Firefox browsers. Do you see a pattern? No single data point is a deal breaker (well, maybe the PhantomJS one), but they reveal a lot when analyzed together!
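To see how such data points reveal more together than alone, here is a small sketch that cross-checks them against the user agent. It is not Akamai's logic, which we can't see; the findBrowserContradictions helper and the plain objects standing in for window are assumptions so the example runs outside a browser.

```javascript
// Cross-checks a few browser-specific properties against the claimed user agent.
function findBrowserContradictions(win, userAgent) {
  const problems = [];
  const isFirefox = /Firefox\//.test(userAgent);
  const isOpera = /OPR\/|Opera/.test(userAgent);

  if (win.callPhantom) problems.push('callPhantom exists (PhantomJS)');
  if (win.opera && !isOpera) problems.push('window.opera set on a non-Opera user agent');
  if ('mozInnerScreenY' in win && !isFirefox) {
    problems.push('mozInnerScreenY present on a non-Firefox user agent');
  }
  if (isFirefox && !('mozInnerScreenY' in win)) {
    problems.push('mozInnerScreenY missing on a Firefox user agent');
  }
  return problems;
}

// Example user agent string claiming to be Chrome.
const chromeUserAgent =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36';

// Plain objects standing in for `window`.
console.log(findBrowserContradictions({}, chromeUserAgent)); // []
console.log(findBrowserContradictions({ mozInnerScreenY: 0 }, chromeUserAgent));
// [ 'mozInnerScreenY present on a non-Firefox user agent' ]
```

In a real page, you would pass window and navigator.userAgent to audit your own headless setup before Akamai does.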

The function called bd generates all these data points. If we look for its usage, we arrive at a line with many variables concatenated: n + "," + o + "," + m + "," + r + "," + c + "," + i + "," + b + "," + bmak.bd(). Believe it or not, o is the screen's available height.

How can we know that? Go to the definition of the variable. Control + click or similar on an IDE will take you there.

The definition itself tells us nothing useful: o = -1. But look at a few lines below:

try {
  o = window.screen ? window.screen.availHeight : -1
} catch (t) {
  o = -1
}

And there you have it! You followed what and how Akamai sends browser/sensor data for backend processing.

We won't cover all the items, but you get the idea. Apply the same process for any data point you're interested in.

But the most crucial question is: why are we doing this? 🤔

To bypass Akamai's defenses, we must understand how they do it. Then, check what data they use for that. And with that knowledge, find ways to access the page without blocks.

Mask Your Sensor Data

If all your machines send similar data, Akamai might fingerprint them, meaning it detects and groups them: same browser vendor, screen size, processing times, and browser data. Is there a pattern? Check your data. They're already doing it.

Assuming that, how do you avoid it? There are several ways to mask your data, such as Puppeteer stealth.

And how do they do it? It's open source, and we can take a look at the evasions!

There are no evasions for availHeight, so we'll switch to hardwareConcurrency. We picked it because it's simple. Most evasions are more complicated.

Let's say that all your production machines are the same. It's usual: same specs, hardware, software, etc. Their concurrency would be the same, for example, "hardwareConcurrency": 4.

It's just a drop in the ocean. But remember that Akamai Bot Manager processes hundreds of data points. We can make it harder for them by switching some.

// Somewhere in your config.
// There should be a helper function called `sample`.
const options = {hardwareConcurrency: sample([4, 6, 8, 16])};

// The evasion itself.
// Proxy navigator.hardwareConcurrency getter and return a custom value.
utils.replaceGetterWithProxy(
  Object.getPrototypeOf(navigator),
  'hardwareConcurrency',
  utils.makeHandler().getterValue(options.hardwareConcurrency)
)

A proxy acts as an intermediary, in this case for the hardwareConcurrency getter on the navigator object. When the property is read, instead of returning the original value, it returns the one we set in the options. It can be, for example, a random number from a list of typical values.

What do we get with this approach? Akamai would see different values for hardwareConcurrency sent randomly. Assuming we do it for several parameters, it's hard to see a pattern.
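To show the mechanism without the plugin's internals, here is a simplified, self-contained sketch. It is not the plugin's code: sample, FakeNavigator, and replaceGetterWithValue are hypothetical names, and a plain class stands in for the browser's navigator so the example runs in Node.

```javascript
// Hypothetical helper: picks one element at random.
const sample = (items) => items[Math.floor(Math.random() * items.length)];

// Stand-in for the browser's Navigator prototype so the sketch runs in Node.
class FakeNavigator {
  get hardwareConcurrency() {
    return 4;
  }
}
const fakeNavigator = new FakeNavigator();

// Wraps the original getter in a Proxy whose `apply` trap returns a fixed value.
function replaceGetterWithValue(proto, property, value) {
  const descriptor = Object.getOwnPropertyDescriptor(proto, property);
  const proxiedGetter = new Proxy(descriptor.get, {
    apply: () => value,
  });
  Object.defineProperty(proto, property, { ...descriptor, get: proxiedGetter });
}

const chosen = sample([4, 6, 8, 16]);
replaceGetterWithValue(Object.getPrototypeOf(fakeNavigator), 'hardwareConcurrency', chosen);

console.log(fakeNavigator.hardwareConcurrency === chosen); // true
```

Wrapping the original getter in a Proxy, rather than assigning a new function, keeps the replacement closer to the original when a script inspects it. That is part of why real evasions are more involved than this sketch.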

Isn't this a complicated process for Akamai to run on each visit? The good part for everyone is that it runs only once. Then it sets cookies to avoid running the whole process again.

Cookies to Avoid Continuous Challenges

Why is it good for you? Once you've obtained the cookies, the next requests should go unchecked. It means that those cookies will bypass Akamai WAF!

We suggest keeping the same IP for those requests to simulate an actual user session.

The standard cookies used by Akamai are _abck, ak_bmsc, bm_sv, and bm_mi. It's not easy to find information about these. Thanks to cookie policies, some sites list and explain them.

Notice that ak_bmsc is HTTP-only. That means that you can't access its content from JavaScript. You'll need to check the response headers on the sensor data call. You can check the headers or call document.cookie on the browser for the others.

And that cookie content is critical! The sensor call decides whether to allow your request and generates that cookie for your session. Once obtained, send it every time to avoid new checks.
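As a sketch of that last step, the snippet below picks the Akamai cookies out of a list of Set-Cookie response headers and builds the Cookie header for the following requests. The cookie values and helper names are made up for the example; how you read the response headers depends on your HTTP client.

```javascript
// The standard Akamai cookie names listed above.
const AKAMAI_COOKIES = ['_abck', 'ak_bmsc', 'bm_sv', 'bm_mi'];

// Extracts the wanted cookies from an array of Set-Cookie header values.
function pickCookies(setCookieHeaders, names = AKAMAI_COOKIES) {
  const jar = {};
  for (const header of setCookieHeaders) {
    const pair = header.split(';')[0]; // "name=value" comes before the attributes
    const separator = pair.indexOf('=');
    if (separator === -1) continue;
    const name = pair.slice(0, separator).trim();
    if (names.includes(name)) jar[name] = pair.slice(separator + 1).trim();
  }
  return jar;
}

// Serializes the jar into a Cookie request header value.
function toCookieHeader(jar) {
  return Object.entries(jar)
    .map(([name, value]) => `${name}=${value}`)
    .join('; ');
}

// Hypothetical Set-Cookie values from the sensor data response.
const setCookie = [
  '_abck=AAA111~0~BBB; Domain=.example.com; Path=/; Secure',
  'ak_bmsc=CCC222; Domain=.example.com; Path=/; HttpOnly',
  'session=unrelated; Path=/',
];

console.log(toCookieHeader(pickCookies(setCookie)));
// _abck=AAA111~0~BBB; ak_bmsc=CCC222
```

Because ak_bmsc is HTTP-only, reading it from the response headers like this is the only option; document.cookie won't show it.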

Conclusion

It's been quite a ride! We know it went deep, but bot detection is a complex topic.

The main points to bypass Akamai bot detection or other defensive systems are:

- Good proxies with fresh and rotating IPs.

- Follow robots.txt.

- Use headless browsers with stealth mode.

- Understand Akamai's challenges so that you can adapt the evasions.

For updated info, you can check their site. It explains, not in depth, what aspects they cover and some general information. Not much, but it can guide you if anything changes.

If you liked it, you might be interested in our article about how to bypass Cloudflare.

Thanks for reading! We hope that you found this guide helpful. You can sign up for free, try ZenRows, and send us any questions, comments, or suggestions.

Frequent Questions

How to Bypass Akamai’s Rate Limit?

You can bypass Akamai’s rate limit using practices such as rotating proxies, changing HTTP headers, and stealthy headless browsers. However, these approaches are unreliable because Akamai learns from its visitors, so a web scraping API like ZenRows can serve you better.

What Does Akamai Do Exactly?

Akamai’s primary goal is to protect its users against threats such as DDoS attacks and malicious bot traffic. Its other services include content delivery, cloud security, edge computing, etc.

How Does Akamai Bot Manager Work?

Akamai Bot Manager identifies and blocks malicious bots using techniques like IP blocking, browser fingerprinting, behavior analysis, sensor data analysis, and CAPTCHAs.

How Can You Tell If a Site Uses Akamai?

You can tell if a site uses Akamai by inspecting its source code and response headers. Look for references such as Akamai or edgekey. You can also send a request with Akamai's Pragma debug headers to see if the URL can be cached on Akamai.

However, not every Akamai deployment has obvious indicators. Some use anonymous or obfuscated scripts that may require reverse engineering.

Is Akamai a Firewall?

Yes, Akamai offers Web Application Firewall (WAF) services, including bot management and protection against DDoS attacks, cross-site scripting (XSS), SQL injection, and more.

What is AkamaiGhost?

AkamaiGhost is an edge server within the Akamai network, placed in strategic geolocations to cache content and deliver it faster to users. AkamaiGhost gathers relevant data on traffic patterns and potential threats, which Akamai collects to enhance the effectiveness of security systems like DDoS protection and WAFs across its data centers.
